Analysis of Ext3, Ext4 and XFS over Networks in a RAID Environment Using the Linux Operating System

Minor Research Project

Funded by U.G.C.

F.No.:MS-5/102054/XII/14-15/CRO

2015 - 2017

The basic idea behind RAID is to combine multiple small, inexpensive disk drives into an array to accomplish performance or redundancy goals not attainable with one large and expensive drive. This array of drives appears to the computer as a single logical storage unit or drive.

RAID allows information to be spread across, and accessed from, several disks. It uses techniques such as disk striping (RAID Level 0), disk mirroring (RAID Level 1), and disk striping with parity (RAID Level 5) to achieve redundancy, lower latency, increased bandwidth, and a better ability to recover from hard disk crashes. Striping alone (RAID Level 0) improves performance but provides no redundancy.
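The parity idea behind RAID Level 5 can be illustrated with XOR. The parity chunk of a stripe is the XOR of its data chunks, so any single lost chunk equals the XOR of the surviving chunks.

The Python sketch below assumes equal-sized chunks held in memory. The function names are hypothetical and are not part of the Linux MD driver.

```python
from functools import reduce


def xor_chunks(chunks: list[bytes]) -> bytes:
    """XOR equal-length byte strings together, byte by byte."""
    if len({len(c) for c in chunks}) != 1:
        raise ValueError("need one or more chunks of identical length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


def compute_parity(data_chunks: list[bytes]) -> bytes:
    """Parity chunk for one stripe: the XOR of all data chunks."""
    return xor_chunks(data_chunks)


def rebuild_missing(surviving_chunks: list[bytes]) -> bytes:
    """Recover the single missing chunk (data or parity) of a stripe.

    Because x ^ x == 0, XOR-ing every surviving chunk (parity included)
    cancels the known data and leaves exactly the lost chunk.
    """
    return xor_chunks(surviving_chunks)


if __name__ == "__main__":
    stripe = [b"AAAA", b"BBBB"]              # two data chunks, as on a 3-disk RAID 5
    parity = compute_parity(stripe)          # stored on the third disk
    # Simulate losing the disk that held b"BBBB".
    print(rebuild_missing([stripe[0], parity]))  # b'BBBB'
```

Real RAID 5 rotates the parity chunk across the member disks from stripe to stripe. This sketch only shows the arithmetic for a single stripe.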

RAID distributes data evenly across the drives in the array by breaking it into consistently sized chunks (commonly 32 KB or 64 KB, although other values are acceptable). Each chunk is then written to a hard drive in the array according to the RAID level employed. When the data is read, the process is reversed, giving the illusion that the multiple drives in the array are actually one large drive.
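The chunk-to-disk mapping for plain striping (RAID Level 0) can be sketched as below. The sketch makes three assumptions: a round-robin layout over n disks, a 64 KB (64 KiB) chunk size, and a hypothetical `locate` helper. Parity levels additionally rotate a parity chunk through each stripe, which this ignores.

```python
CHUNK_SIZE = 64 * 1024  # assumed chunk size in bytes (64 KiB)


def locate(logical_offset: int, num_disks: int,
           chunk_size: int = CHUNK_SIZE) -> tuple[int, int]:
    """Map a byte offset on the striped array to
    (disk index, byte offset on that disk) for round-robin RAID 0."""
    if logical_offset < 0 or num_disks < 1 or chunk_size < 1:
        raise ValueError("invalid arguments")
    chunk_number, within_chunk = divmod(logical_offset, chunk_size)
    disk = chunk_number % num_disks      # consecutive chunks rotate across disks
    stripe = chunk_number // num_disks   # full stripes that precede this chunk
    return disk, stripe * chunk_size + within_chunk


if __name__ == "__main__":
    for offset in (0, 65536, 3 * 65536 + 10):
        print(offset, "->", locate(offset, num_disks=3))
```

With three disks, offset 0 maps to disk 0 and offset 65536 starts disk 1. Offset 196618 wraps back to disk 0 at byte 65546, the start of the second stripe plus 10 bytes.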

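System administrators and others who manage large amounts of data would benefit from using RAID technology. Primary reasons to deploy RAID include enhanced speed, increased storage capacity using a single virtual disk, and minimized data loss from disk failure.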

The hardware-based array manages the RAID subsystem independently of the host. It presents a single disk per RAID array to the host.

A Hardware RAID device connects to the SCSI controller and presents the RAID arrays as a single SCSI drive. An external RAID system moves all RAID handling "intelligence" into a controller located in the external disk subsystem. The whole subsystem is connected to the host via a normal SCSI controller and appears to the host as a single disk.

RAID controller cards function like a SCSI controller to the operating system and handle all the actual drive communications. The user plugs the drives into the RAID controller (just like a normal SCSI controller) and then adds them to the RAID controller's configuration; the operating system won't know the difference.

Software RAID implements the various RAID levels in the kernel disk (block device) code. It offers the cheapest possible solution, as expensive disk controller cards or hot-swap chassis are not required. Software RAID also works with cheaper IDE disks as well as SCSI disks. With today's faster CPUs, Software RAID generally performs as well as, or better than, Hardware RAID.

The Linux kernel contains an MD driver that allows the RAID solution to be completely hardware independent. The performance of a software-based array depends on the server CPU performance and load.
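The MD driver reports the status of every software RAID array in `/proc/mdstat`. The sketch below parses that file's array lines. For each md device it lists the RAID level, the member partitions, and whether all members are up (a status such as `[3/3] [UUU]`).

Some caveats apply:
- The parser is a hypothetical helper, not an official tool; `mdadm --detail` is the authoritative interface.
- It assumes the common mdstat layout shown in the embedded sample.
- The device names and sizes in the sample are illustrative, not taken from the screenshots.
- Inactive arrays, which list no level, are skipped.

```python
import re

SAMPLE_MDSTAT = """\
Personalities : [raid1] [raid6] [raid5] [raid4]
md1 : active raid5 sdc2[2] sdb2[1] sda2[0]
      41910272 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

md0 : active raid1 sdb1[1] sda1[0]
      511936 blocks super 1.0 [2/2] [UU]

unused devices: <none>
"""

# "md1 : active raid5 sdc2[2] ..." (an optional "(auto-read-only)" may follow the state)
ARRAY_LINE = re.compile(r"^(md\d+)\s*:\s*(\w+)(?:\s+\([^)]*\))?\s+(raid\d+|linear)\s+(.*)$")
# "[3/3] [UUU]": expected/active member counts, then U (up) or _ (missing) per slot
STATUS = re.compile(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]")


def parse_mdstat(text: str) -> list[dict]:
    arrays: list[dict] = []
    current = None
    for line in text.splitlines():
        m = ARRAY_LINE.match(line)
        if m:
            name, state, level, members = m.groups()
            current = {
                "name": name,
                "state": state,
                "level": level,
                # "sdc2[2]" -> "sdc2"; trailing flags such as "(F)" are dropped
                "members": [re.sub(r"\[\d+\].*$", "", d) for d in members.split()],
                "healthy": None,  # filled in from the status line that follows
            }
            arrays.append(current)
            continue
        s = STATUS.search(line)
        if s and current is not None:
            expected, active, flags = int(s.group(1)), int(s.group(2)), s.group(3)
            current["healthy"] = expected == active and "_" not in flags
    return arrays


if __name__ == "__main__":
    try:
        with open("/proc/mdstat") as f:
            text = f.read()
    except FileNotFoundError:   # not Linux, or the md driver is not loaded
        text = SAMPLE_MDSTAT
    for array in parse_mdstat(text):
        print(array)
```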

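RAID supports various configurations, including levels 0, 1, 4, 5, and linear. These RAID types are defined as follows:

Level 0 (striping): data is split into chunks that are written across all member disks; there is no redundancy.

Level 1 (mirroring): an identical copy of the data is written to each member disk.

Level 4: striping with parity stored on a single dedicated parity disk.

Level 5: striping with parity distributed across all member disks.

Linear: member disks are concatenated, and data fills one disk before moving on to the next.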
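The practical difference between these levels shows up in usable capacity and in how many disk failures an array survives. The sketch below makes several assumptions:
- All member disks are the same size.
- Metadata overhead is ignored.
- Level names are passed as the strings "0", "1", "4", "5" and "linear".
- The function names are hypothetical.

```python
def _check(num_disks: int, disk_size: int) -> None:
    if num_disks < 1 or disk_size < 0:
        raise ValueError("need at least one disk and a non-negative size")


def usable_capacity(level: str, num_disks: int, disk_size: int) -> int:
    """Usable space of an array of equal disks, in the same unit as disk_size."""
    _check(num_disks, disk_size)
    if level in ("linear", "0"):
        return num_disks * disk_size        # concatenation or striping: all space usable
    if level == "1":
        return disk_size                    # every disk holds a full copy
    if level in ("4", "5"):
        if num_disks < 2:
            raise ValueError("parity levels need at least two disks")
        return (num_disks - 1) * disk_size  # one disk's worth of space holds parity
    raise ValueError(f"unsupported RAID level: {level}")


def failures_tolerated(level: str, num_disks: int) -> int:
    """How many member disks can fail without losing data."""
    _check(num_disks, 0)
    if level in ("linear", "0"):
        return 0
    if level == "1":
        return num_disks - 1
    if level in ("4", "5"):
        return 1
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    for lvl in ("linear", "0", "1", "5"):
        # three hypothetical 500 GB disks
        print(lvl, usable_capacity(lvl, 3, 500), "GB,",
              failures_tolerated(lvl, 3), "failure(s) tolerated")
```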

The following screenshot shows the two RAID arrays, at RAID Level 5 and RAID Level 1, that I created at installation time using three 7200 RPM hard disks:


Below is a screenshot of the three additional RAID arrays that I created to hold the ext3, ext4 and XFS file systems, alongside the two RAID arrays created at installation time:

The write speed of each file system, measured with the dd command using oflag=dsync, can be analyzed from the following chart:
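With oflag=dsync, dd opens its output file for synchronized data writes. Each write therefore returns only after the data has reached stable storage, so the page cache cannot hide the disk's latency. A typical invocation has the form `dd if=/dev/zero of=<file on the tested mount> bs=1M count=<n> oflag=dsync`; the exact parameters used for the chart are not stated here.

Below is a rough Python analogue built on `os.O_DSYNC`, which is available on Linux. The function name, block size and count are illustrative assumptions. Python's per-call overhead means the numbers will not match dd exactly.

```python
import os
import tempfile
import time


def dsync_write_speed(path: str, block_size: int = 1024 * 1024, count: int = 64) -> float:
    """Write `count` zero-filled blocks to `path` with O_DSYNC and return MB/s.

    As with dd's oflag=dsync, each write() returns only after the data has
    been committed to stable storage.
    """
    block = bytes(block_size)  # zero-filled, like if=/dev/zero
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | os.O_DSYNC
    fd = os.open(path, flags, 0o644)
    try:
        start = time.perf_counter()
        for _ in range(count):
            if os.write(fd, block) != block_size:
                raise OSError("short write")
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    # dd reports decimal megabytes (10**6 bytes) per second.
    return block_size * count / max(elapsed, 1e-9) / 1e6


if __name__ == "__main__":
    # Demo in a temporary directory; pass a path on the ext3/ext4/XFS mount instead.
    with tempfile.TemporaryDirectory() as d:
        print(f"{dsync_write_speed(os.path.join(d, 'testfile')):.1f} MB/s")
```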

Here is the analysis of the read speed of the file systems:
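Read throughput can be measured in the same spirit by reading a file back sequentially in large blocks. A file that was just written is probably still in the page cache, so the result reflects memory speed rather than the disk. On Linux, dropping the caches first avoids this: run `sync; echo 3 > /proc/sys/vm/drop_caches` as root.

The helper below is a hypothetical sketch, not the procedure used to produce the chart.

```python
import os
import tempfile
import time


def read_speed(path: str, block_size: int = 1024 * 1024) -> tuple[int, float]:
    """Read `path` sequentially in `block_size` chunks.

    Returns (bytes read, MB/s with MB = 10**6 bytes).
    """
    total = 0
    with open(path, "rb", buffering=0) as f:   # unbuffered: one read() per block
        start = time.perf_counter()
        while True:
            chunk = f.read(block_size)
            if not chunk:                      # empty bytes means end of file
                break
            total += len(chunk)
        elapsed = time.perf_counter() - start
    return total, total / max(elapsed, 1e-9) / 1e6


if __name__ == "__main__":
    # Demo on a throwaway file; point `path` at a file on the mount under test instead.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(8 * 1024 * 1024))
        path = tmp.name
    try:
        nbytes, mbps = read_speed(path)
        print(f"read {nbytes} bytes at {mbps:.1f} MB/s (probably served from cache)")
    finally:
        os.remove(path)
```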

Read/Write Performance Analysis of ext3, ext4 and XFS File Systems over a Network

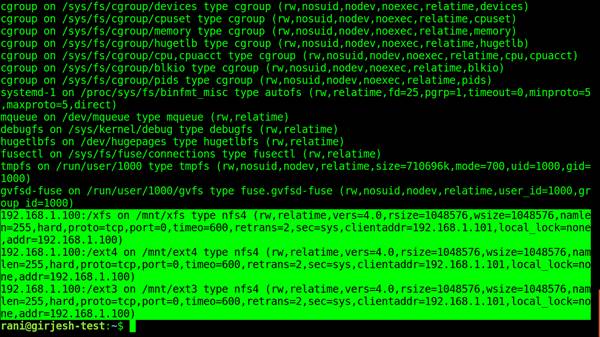

Below is a screenshot of the server machine's partitions as mounted on the client:
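The client's `/proc/mounts` can confirm which remote export is mounted where. Its fields are source, mount point, file system type, options and two numeric columns. The client only sees the network protocol (for example `nfs` or `nfs4`) as the type. Whether an export lives on ext3, ext4 or XFS is visible only on the server.

The sketch below assumes the shares are exported over NFS, which is not stated in the text. The host address and paths in the sample are illustrative, and the function is hypothetical.

```python
SAMPLE_MOUNTS = """\
/dev/md1 / ext4 rw,relatime,data=ordered 0 0
192.168.1.10:/exports/ext3 /mnt/ext3 nfs4 rw,relatime,vers=4.0 0 0
192.168.1.10:/exports/xfs /mnt/xfs nfs rw,relatime,vers=3 0 0
"""

NETWORK_FS_TYPES = {"nfs", "nfs4", "cifs"}


def network_mounts(text: str) -> list[tuple[str, str, str]]:
    """Return (source, mount point, type) for network file system mounts."""
    result = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        source, mount_point, fs_type = fields[:3]
        if fs_type in NETWORK_FS_TYPES:
            # /proc/mounts escapes a space in a path as the octal sequence \040
            result.append((source, mount_point.replace("\\040", " "), fs_type))
    return result


if __name__ == "__main__":
    try:
        with open("/proc/mounts") as f:
            text = f.read()
    except FileNotFoundError:   # not running on Linux
        text = SAMPLE_MOUNTS
    for entry in network_mounts(text):
        print(entry)
```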

Here is the analysis of ext3, ext4 and XFS for read speed:

Here is the analysis of ext3, ext4 and XFS for write speed:

Here is the summary of write speeds in MB/s on the ext3, ext4 and XFS file systems (using a switch):
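When client and server communicate through a switch, throughput over the network cannot exceed the link rate. If all three file systems sit close to that ceiling, the link rather than the file system is the bottleneck.

The back-of-the-envelope check below rests on three assumptions:
- The link is Gigabit Ethernet; the link speed is not stated here.
- Protocol overhead is folded into a single efficiency factor.
- The 10% tolerance is an arbitrary choice.

The function names are hypothetical.

```python
def link_ceiling_mb_per_s(link_mbit: float, efficiency: float = 1.0) -> float:
    """Upper bound on payload throughput in MB/s (10**6 bytes/s) for a link
    of `link_mbit` megabits per second; `efficiency` in (0, 1] is an
    assumed allowance for Ethernet/IP/TCP and file-sharing protocol overhead."""
    if link_mbit <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid link speed or efficiency")
    return link_mbit / 8 * efficiency   # 8 bits per byte


def is_network_bound(measured_mb_s: float, link_mbit: float,
                     efficiency: float = 0.9, tolerance: float = 0.1) -> bool:
    """True if a measured rate is within `tolerance` of the link ceiling."""
    return measured_mb_s >= (1 - tolerance) * link_ceiling_mb_per_s(link_mbit, efficiency)


if __name__ == "__main__":
    print(link_ceiling_mb_per_s(1000))        # raw Gigabit ceiling
    print(link_ceiling_mb_per_s(1000, 0.9))   # with an assumed 10% overhead
```

A Gigabit link carries at most 1000 / 8 = 125 MB/s of raw data. Measured network rates therefore say little about file-system differences once they approach roughly 110 MB/s.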

Here is the summary of write speeds in MB/s on the ext3, ext4 and XFS file systems using oflag=dsync:


Scalability check using LVM for ext3, ext4 and XFS

This is the overall scheme of logical volumes that I maintained in my analysis:
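LVM allocates logical volumes in whole physical extents, 4 MiB each by default. A volume's size is therefore always a multiple of the extent size, and growing it means taking extents from the volume group's free pool.

The arithmetic can be sketched as follows. The sketch assumes the default extent size is in effect and uses hypothetical function names.

```python
MiB = 1024 * 1024
DEFAULT_PE_SIZE = 4 * MiB  # LVM2's default physical extent size


def extents_needed(size_bytes: int, pe_size: int = DEFAULT_PE_SIZE) -> int:
    """Physical extents LVM must allocate for `size_bytes`, rounded up."""
    if size_bytes < 0 or pe_size <= 0:
        raise ValueError("size must be non-negative and pe_size positive")
    return -(-size_bytes // pe_size)   # integer ceiling division


def allocated_size(size_bytes: int, pe_size: int = DEFAULT_PE_SIZE) -> int:
    """Actual size of a logical volume requested as `size_bytes`."""
    return extents_needed(size_bytes, pe_size) * pe_size


def can_extend(add_bytes: int, free_extents: int, pe_size: int = DEFAULT_PE_SIZE) -> bool:
    """True if the volume group's free extents cover growing an LV by `add_bytes`."""
    return extents_needed(add_bytes, pe_size) <= free_extents


if __name__ == "__main__":
    print(extents_needed(10 * 1024 ** 3))   # extents for a 10 GiB volume
    print(allocated_size(4 * MiB + 1))      # rounds up to two extents
```

Growing the logical volume does not grow the file system inside it. That requires a separate step such as `resize2fs` for ext3/ext4 or `xfs_growfs` for XFS, or running `lvextend` with its `--resizefs` option.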


The screen below shows all logical volumes mounted:

The following screenshot shows the lv-xfs logical volume, formatted with the XFS file system, on which the resize failed:
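A likely explanation for the failure is that XFS can only be grown, never shrunk. `xfs_growfs` enlarges a mounted XFS file system, but no tool reduces one. By contrast, ext3 and ext4 can be grown while mounted and shrunk with `resize2fs` while unmounted.

The screenshot does not show which direction was attempted, so reading this as a shrink is an assumption. The documented constraints can be captured in a small lookup table; the helper is hypothetical.

```python
from enum import Enum


class Direction(Enum):
    GROW = "grow"
    SHRINK = "shrink"


# (file system, direction) -> mount states in which the resize is possible
RESIZE_RULES = {
    ("ext3", Direction.GROW): {"mounted", "unmounted"},
    ("ext4", Direction.GROW): {"mounted", "unmounted"},
    ("ext3", Direction.SHRINK): {"unmounted"},   # resize2fs, offline only
    ("ext4", Direction.SHRINK): {"unmounted"},
    ("xfs", Direction.GROW): {"mounted"},        # xfs_growfs works on a mounted fs
    ("xfs", Direction.SHRINK): set(),            # XFS cannot be shrunk
}


def resize_supported(fs_type: str, direction: Direction, mounted: bool) -> bool:
    state = "mounted" if mounted else "unmounted"
    return state in RESIZE_RULES.get((fs_type.lower(), direction), set())


if __name__ == "__main__":
    for fs in ("ext3", "ext4", "xfs"):
        print(fs, "shrink offline:", resize_supported(fs, Direction.SHRINK, mounted=False))
```

When shrinking ext3 or ext4 on LVM, the file system has to be reduced before the logical volume (`lvreduce`). Otherwise the end of the file system is cut off.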

Conclusion (Contribution to Society)

Choosing the Red Hat Enterprise Linux filesystem that is appropriate for your application is often a non-trivial decision because of the large number of options available and the trade-offs involved. The ext4 filesystem brings many new features and enhancements over ext3, making it a good choice for a variety of workloads. A tremendous amount of work has gone into bringing ext4 to Linux. What was once essentially a simple filesystem has become an enterprise-ready solution, with a good balance of scalability, reliability, performance and stability. As a result, ext3 users have the option to upgrade their filesystem and take advantage of the newest generation of the ext family.

In this project, I have summarized the read, write, network and scalability results of three file systems: ext3, ext4 and XFS. The results confirmed the majority of our expectations. The ext4 file system, a new and modern 64-bit design, showed superior characteristics compared with its predecessor, ext3, and outperformed it in all test procedures. This is owed to a number of innovative techniques. These include new file allocation methods (extents, persistent pre-allocation, delayed allocation and the multiblock allocator), HTree indexing for large directories (always enabled), improved journaling and the buffer cache mechanism. As a result, ext4 outperformed its predecessor under demanding conditions, namely the read/write operations over RAID, networks and LVM that I used in my experiments. It can be concluded that ext4 performs better than its predecessor. Its journaling techniques, combined with the cache mechanism, have not slowed the system down but have actually improved its performance.
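To see why extents reduce metadata compared with ext3's block-by-block mapping, consider a file's list of physical block numbers. ext3 records one pointer per block through indirect blocks. ext4 instead records each run of contiguous blocks as a (start, length) extent.

The sketch below collapses a block list into extents. It caps each extent at 32,768 blocks, which at 4 KiB per block is the 128 MiB maximum extent size listed for ext4 in the limits table. The function is a hypothetical illustration, not ext4's allocator. Delayed allocation and the multiblock allocator matter precisely because they make such contiguous runs more likely.

```python
MAX_EXTENT_BLOCKS = 32768  # ext4 limit for one initialized extent (128 MiB at 4 KiB blocks)


def to_extents(blocks: list[int], max_len: int = MAX_EXTENT_BLOCKS) -> list[tuple[int, int]]:
    """Collapse a file's physical block numbers (in file order) into
    (first_block, length) runs of physically contiguous blocks."""
    extents: list[tuple[int, int]] = []
    for b in blocks:
        if extents:
            start, length = extents[-1]
            # Extend the current run only if b follows it directly and the cap allows.
            if b == start + length and length < max_len:
                extents[-1] = (start, length + 1)
                continue
        extents.append((b, 1))
    return extents


if __name__ == "__main__":
    blocks = list(range(1000, 1100)) + list(range(5000, 5010))
    print(len(blocks), "block pointers ->", to_extents(blocks))
```

For this example file, 110 block pointers collapse into the two extents (1000, 100) and (5000, 10).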

The results encourage Linux users to adopt the ext4 file system. If both your server and your storage device are large, XFS is likely to be the best choice. Even with smaller storage arrays, XFS performs very well when the average file size is large, for example hundreds of megabytes.

If your existing workload has performed well with Ext4, staying with Ext4 on Red Hat Enterprise Linux 6 or migrating to Ext4 on Red Hat Enterprise Linux 7 should provide you and your applications with a very familiar environment. Two key advantages of Ext4 over Ext3 on the same storage are faster filesystem check and repair times and higher streaming read and write performance on high-speed devices.

Another way to characterize this is that the Ext4 filesystem variants tend to perform better on systems that have limited I/O capability. Ext3 and Ext4 perform better with limited bandwidth (< 200 MB/s) and up to about 1,000 IOPS.

For anything with higher capability, XFS tends to be faster. XFS is a file system that was designed from day one for computer systems with large numbers of CPUs and large disk arrays. It focuses on supporting large files and good streaming I/O performance. It also has some interesting administrative features not supported by other Linux file systems.

XFS also consumes about twice as much CPU per metadata operation as Ext3 and Ext4, so if you have a CPU-bound workload with little concurrency, the Ext3 or Ext4 variants will be faster. In general, Ext3 or Ext4 is better if an application uses a single read/write thread and small files, while XFS shines when an application uses multiple read/write threads and bigger files. We recommend that you measure the performance of your specific application on your target server and storage system to make sure you choose the appropriate type of filesystem. For very large data, XFS is the strongest choice, as the maximum limits it supports show:

| Limit | ext3 | ext4 | XFS |
|---|---|---|---|
| Max file system size | 16 TiB | 16 TiB | 16 EiB |
| Max file size | 2 TiB | 16 TiB | 8 EiB |
| Max extent size | 4 KiB | 128 MiB | 8 GiB |
| Max extended attribute size | 4 KiB | 4 KiB | 64 KiB |
| Max inode number | 2^32 | 2^32 | 2^64 |
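The size limits in the table can be combined with the rules of thumb given earlier in a small helper. Those rules are: beyond roughly 200 MB/s or 1,000 IOPS XFS tends to be faster; XFS suits several I/O threads working on large files; ext4 suits single-threaded, small-file workloads.

This is a hypothetical sketch of that guidance, not a substitute for the benchmarking recommended above. Several choices in it are assumptions:
- The 100 MB cut-off for "large" files is loosely based on the "hundreds of megabytes" remark.
- The order in which the rules are applied is arbitrary.
- ext3 is not suggested, following the recommendation to prefer ext4.

```python
TiB = 1024 ** 4
EiB = 1024 ** 6
MB = 10 ** 6

# Maximum sizes from the limits table, in bytes.
LIMITS = {
    "ext3": {"fs_size": 16 * TiB, "file_size": 2 * TiB},
    "ext4": {"fs_size": 16 * TiB, "file_size": 16 * TiB},
    "xfs": {"fs_size": 16 * EiB, "file_size": 8 * EiB},
}


def fits(fs: str, fs_size: int, largest_file: int) -> bool:
    """True if `fs_size` and `largest_file` stay within the tabulated limits of `fs`."""
    limit = LIMITS[fs]
    return fs_size <= limit["fs_size"] and largest_file <= limit["file_size"]


def suggest_filesystem(*, bandwidth_mb_s: float, iops: int, threads: int,
                       avg_file_size: int, fs_size: int, largest_file: int) -> str:
    """Rule-of-thumb choice between ext4 and XFS (sizes in bytes)."""
    if not fits("ext4", fs_size, largest_file):
        if fits("xfs", fs_size, largest_file):
            return "xfs"              # only XFS can hold this much data
        raise ValueError("sizes exceed every tabulated limit")
    # Above roughly 200 MB/s or 1,000 IOPS, XFS tends to be faster.
    high_capability = bandwidth_mb_s >= 200 or iops > 1000
    # Several read/write threads on large files favour XFS (100 MB cut-off assumed).
    parallel_large_files = threads > 1 and avg_file_size >= 100 * MB
    if high_capability or parallel_large_files:
        return "xfs"
    # Limited I/O, single-threaded or small-file workloads favour ext4.
    return "ext4"


if __name__ == "__main__":
    print(suggest_filesystem(bandwidth_mb_s=150, iops=800, threads=1,
                             avg_file_size=1 * MB, fs_size=1 * TiB, largest_file=10 ** 9))
```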